5.27.4. OpenStack Neutron Density Testing

| status: | ready |
|---|---|
| version: | 1.0 |
| Abstract: | Density testing lets us launch many instances on a single OpenStack cluster. Beyond reaching high numbers, we also want to ensure that all instances are properly wired into the network and, more importantly, have connectivity with the public network. |

5.27.4.1. Test Plan

The goal of this test is to launch as many instances as possible in the OpenStack cloud and verify that all of them have correct connectivity with the public network. On startup, each instance reports itself to an external monitoring server. On success, the server log should contain the instances' IPs and, for each instance, the number of attempts it needed to get its IP from the metadata service and to send that IP to the server.
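The attempt counters come from the boot script that each instance runs (the `user_data` script in the Heat template of the Tools section). The following Python sketch mirrors that retry logic so it can be reasoned about and tested without a cloud: both loops retry up to `retry_count` times, and the indices of the successful attempts are reported as `retry_get` and `retry_send`. The function name and the injected `get_ip`/`send` callables are hypothetical stand-ins for the two `curl` calls in the script.

```python
import json
import time


def report_with_retries(get_ip, send, retry_count=10, retry_delay=3.0,
                        sleep=time.sleep):
    """Mirror the instance boot script: fetch the IP, then report it.

    get_ip() returns the instance IP, or an empty string on failure.
    send(body) returns True when the POST to the server succeeded.
    Returns the JSON body of the last send attempt.
    """
    if retry_count < 1:
        raise ValueError("retry_count must be at least 1")

    # Phase 1: ask the metadata service for the IP, as the first shell loop does.
    instance_ip = ""
    for retry_get in range(1, retry_count + 1):
        instance_ip = get_ip()
        if instance_ip:
            break
        sleep(retry_delay)

    # Phase 2: report the IP together with both attempt counters.
    # Like the shell script, it still reports even if no IP was obtained.
    body = ""
    for retry_send in range(1, retry_count + 1):
        body = json.dumps({"instance_ip": instance_ip,
                           "retry_get": retry_get,
                           "retry_send": retry_send})
        if send(body):
            break
        sleep(retry_delay)
    return body
```

A report with `"retry_get": 3` therefore means the metadata service answered on the third try.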

5.27.4.1.1. Test Environment

5.27.4.1.1.1. Preparation

This test plan is performed against an existing OpenStack cloud. The monitoring server is deployed on a machine outside the cloud.

During each iteration, instances are created and connected to the same Neutron network, which is plugged into a Neutron router that connects OpenStack to the external network. The case of multiple Neutron networks may also be considered.

5.27.4.1.1.2. Environment description

The environment description includes the hardware specification of the servers, network parameters, operating system and OpenStack deployment characteristics.

5.27.4.1.1.2.1. Hardware

This section lists all types of hardware nodes.

| Parameter | Value | Comments |
|---|---|---|
| model | e.g. Supermicro X9SRD-F | |
| CPU | e.g. 6 x Intel(R) Xeon(R) CPU E5-2620 v2 @ 2.10GHz | |
| role | e.g. compute or network | |

5.27.4.1.1.2.2. Network

This section lists the network interfaces and their parameters. For complicated cases, this section may include a topology diagram and switch parameters.

| Parameter | Value | Comments |
|---|---|---|
| network role | e.g. provider or public | |
| card model | e.g. Intel | |
| driver | e.g. ixgbe | |
| speed | e.g. 10G or 1G | |
| MTU | e.g. 9000 | |
| offloading modes | e.g. default | |

5.27.4.1.1.2.3. Software

This section describes the installed software.

| Parameter | Value | Comments |
|---|---|---|
| OS | e.g. Ubuntu 14.04.3 | |
| OpenStack | e.g. Mitaka | |
| Hypervisor | e.g. KVM | |
| Neutron plugin | e.g. ML2 + OVS | |
| L2 segmentation | e.g. VXLAN | |
| virtual routers | e.g. DVR | |

5.27.4.1.2. Test Case 1: VM density check

5.27.4.1.2.1. Description

The goal of this test is to launch as many instances as possible in the OpenStack cloud and verify that all of them have correct connectivity with the public network. Instances can be launched in batches (e.g. via Heat). When an instance starts, it sends its IP to the monitoring server located outside the cloud.

The test is considered successful if all instances report their status. As an extension to this test plan, a reverse test case may be considered, in which an external resource tries to connect to each VM using its floating IP address. This should be treated as a separate test case.
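Before launching instances, it can help to confirm that the monitoring server is reachable and accepts reports. The sketch below sends one report by hand in the same JSON format the instances use (`instance_ip`, `retry_get`, `retry_send`), using only the Python 3 standard library. The function name and the default fake IP (`192.0.2.10`, a documentation address) are placeholders; port 4242 is the port hard-coded in the instances' `user_data` script.

```python
import json
import urllib.request


def send_test_report(server, port=4242, instance_ip="192.0.2.10", timeout=5.0):
    """POST one fake instance report to the monitoring server.

    Returns (HTTP status code, response body). The monitoring server
    script answers an accepted report with status 200 and "Hello!".
    """
    payload = {"instance_ip": instance_ip, "retry_get": 1, "retry_send": 1}
    request = urllib.request.Request(
        "http://{}:{}/".format(server, port),
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read().decode("utf-8")
```

For example, `send_test_report("<SERVER_ADDRESS>")` run from a host that can reach the server should return status 200 and add one "Incoming connection" line to the server log.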

5.27.4.1.2.2. List of performance metrics

| Priority | Value | Measurement Units | Description |
|---|---|---|---|
| 1 | Total | count | Total number of instances |
| 1 | Density | count per compute node | Max instances per compute node |
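Both metrics can be derived from the list of compute hosts the instances landed on. The sketch below assumes the host names were collected beforehand, for example with `openstack server list --long -c Host -f value` run with admin credentials (one host name per instance); the helper name is hypothetical.

```python
from collections import Counter


def density_metrics(hosts):
    """Compute the Total and Density metrics from per-instance host names.

    hosts: iterable of compute host names, one entry per instance.
    Blank entries (e.g. instances that were never scheduled) are ignored.
    Returns (total number of instances, max instances on one compute node).
    """
    per_host = Counter(host.strip() for host in hosts if host.strip())
    total = sum(per_host.values())
    density = max(per_host.values()) if per_host else 0
    return total, density
```

For example, `density_metrics(["node-1", "node-2", "node-1"])` returns `(3, 2)`.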

5.27.4.1.3. Tools

To execute the test plan:

- Disable quotas in the OpenStack cloud, since the test goes far beyond the limits that are usually allowed:

      #!/usr/bin/env bash
      #==========================================================================
      # Unset quotas for the main Nova and Neutron resources of the tenant
      # named $OS_TENANT_NAME.
      # Neutron quotas: floatingip, network, port, router, security-group,
      # security-group-rule, subnet.
      # Nova quotas: cores, instances, ram, server-groups, server-group-members.
      #
      # Usage: unset_quotas.sh
      #==========================================================================

      set -e

      # Fail early with a clear message if the tenant name is not set
      : "${OS_TENANT_NAME:?OS_TENANT_NAME must be set}"

      NEUTRON_QUOTAS=(floatingip network port router security-group security-group-rule subnet)
      NOVA_QUOTAS=(cores instances ram server-groups server-group-members)

      OS_TENANT_ID=$(openstack project show "$OS_TENANT_NAME" -c id -f value)

      # A quota value of -1 means "unlimited" for both Neutron and Nova
      echo "Unsetting Neutron quotas: ${NEUTRON_QUOTAS[*]}"
      for net_quota in "${NEUTRON_QUOTAS[@]}"; do
          neutron quota-update --"$net_quota" -1 "$OS_TENANT_ID"
      done

      echo "Unsetting Nova quotas: ${NOVA_QUOTAS[*]}"
      for nova_quota in "${NOVA_QUOTAS[@]}"; do
          nova quota-update --"$nova_quota" -1 "$OS_TENANT_ID"
      done

      echo "Successfully unset all quotas"
      openstack quota show "$OS_TENANT_ID"

- Configure a machine for the monitoring server and copy the following script onto it (it is started as `server.py` below):

      #!/usr/bin/env python
      # This script sets up a simple HTTP server that listens on a given port.
      # When the server is started, it creates a log file named
      # "instances_<timestamp>.txt" in the log directory (defaults to /tmp).
      # Every incoming POST request with a JSON body is logged to this file.
      # The script runs under both Python 2 and Python 3.

      import argparse
      from datetime import datetime
      import json
      import logging
      import os
      import sys

      try:  # Python 3
          from http.server import BaseHTTPRequestHandler, HTTPServer
      except ImportError:  # Python 2
          from BaseHTTPServer import BaseHTTPRequestHandler, HTTPServer

      LOG = logging.getLogger(__name__)
      FILE_NAME = "instances_{:%Y_%m_%d_%H:%M:%S}.txt".format(datetime.now())


      class PostHandler(BaseHTTPRequestHandler):

          def do_POST(self):
              try:
                  data = self._receive_data()
              except Exception as err:
                  LOG.exception("Failed to process request: %s", err)
                  raise
              else:
                  LOG.info("Incoming connection: ip=%(ip)s, %(data)s",
                           {"ip": self.client_address[0], "data": data})

          def _receive_data(self):
              # Read and decode the JSON body sent by the instance
              length = int(self.headers.get('content-length'))
              data = json.loads(self.rfile.read(length).decode('utf-8'))
              # Begin the response
              self.send_response(200)
              self.end_headers()
              self.wfile.write(b"Hello!\n")
              return data


      def get_parser():
          parser = argparse.ArgumentParser()
          parser.add_argument('-l', '--log-dir', default="/tmp")
          parser.add_argument('-p', '--port', required=True)
          return parser


      def main():
          # Parse CLI arguments
          args = get_parser().parse_args()
          file_name = os.path.join(args.log_dir, FILE_NAME)

          # Log both to the file and to the console
          logging.basicConfig(format='%(asctime)s %(levelname)s:%(message)s',
                              level=logging.INFO,
                              filename=file_name)
          console = logging.StreamHandler(stream=sys.stdout)
          console.setLevel(logging.INFO)
          formatter = logging.Formatter('%(asctime)s %(levelname)s:%(message)s')
          console.setFormatter(formatter)
          logging.getLogger('').addHandler(console)

          # Initialize and start the server
          server = HTTPServer(('0.0.0.0', int(args.port)), PostHandler)
          LOG.info("Starting server on %s:%s, use <Ctrl-C> to stop",
                   server.server_address[0], args.port)
          try:
              server.serve_forever()
          except KeyboardInterrupt:
              LOG.info("Server terminated")
          except Exception as err:
              LOG.exception("Server terminated unexpectedly: %s", err)
              raise
          finally:
              logging.shutdown()


      if __name__ == '__main__':
          main()

- Copy the Heat template; the `heat stack-create` command below expects it in the file `instance_metadata.hot`:

      heat_template_version: 2013-05-23
      description: Template to create multiple instances.

      parameters:
        image:
          type: string
          description: Image used for servers
        flavor:
          type: string
          description: flavor used by the servers
          default: m1.micro
          constraints:
            - custom_constraint: nova.flavor
        public_network:
          type: string
          label: Public network name or ID
          description: Public network with floating IP addresses.
          default: admin_floating_net
        instance_count:
          type: number
          description: Number of instances to create
          default: 1
        server_endpoint:
          type: string
          description: Server endpoint address
        cidr:
          type: string
          description: Private subnet CIDR

      resources:
        private_network:
          type: OS::Neutron::Net

        private_subnet:
          type: OS::Neutron::Subnet
          properties:
            network_id: { get_resource: private_network }
            cidr: { get_param: cidr }
            dns_nameservers:
              - 8.8.8.8

        router:
          type: OS::Neutron::Router
          properties:
            external_gateway_info:
              network: { get_param: public_network }

        router-interface:
          type: OS::Neutron::RouterInterface
          properties:
            router_id: { get_resource: router }
            subnet: { get_resource: private_subnet }

        server_security_group:
          type: OS::Neutron::SecurityGroup
          properties:
            rules: [
              {remote_ip_prefix: 0.0.0.0/0,
              protocol: tcp,
              port_range_min: 1,
              port_range_max: 65535},
              {remote_ip_prefix: 0.0.0.0/0,
              protocol: udp,
              port_range_min: 1,
              port_range_max: 65535},
              {remote_ip_prefix: 0.0.0.0/0,
              protocol: icmp}]

        policy_group:
          type: OS::Nova::ServerGroup
          properties:
            name: nova-server-group
            policies: [anti-affinity]

        server_group:
          type: OS::Heat::ResourceGroup
          properties:
            count: { get_param: instance_count }
            resource_def:
              type: OS::Nova::Server
              properties:
                image: { get_param: image }
                flavor: { get_param: flavor }
                networks:
                  - subnet: { get_resource: private_subnet }
                scheduler_hints: { group: { get_resource: policy_group } }
                security_groups: [{ get_resource: server_security_group }]
                user_data_format: RAW
                user_data:
                  str_replace:
                    template: |
                      #!/bin/sh -x
                      RETRY_COUNT=${RETRY_COUNT:-10}
                      RETRY_DELAY=${RETRY_DELAY:-3}
                      # Get the instance IP from the metadata service
                      for i in `seq 1 $RETRY_COUNT`; do
                        instance_ip=`curl http://169.254.169.254/latest/meta-data/local-ipv4`
                        [ -n "$instance_ip" ] && break
                        echo "Retry get_instance_ip $i"
                        sleep $RETRY_DELAY
                      done
                      # Report the IP and both attempt counters to the monitoring server
                      for j in `seq 1 $RETRY_COUNT`; do
                        curl -vX POST http://$SERVER_ENDPOINT:4242/ -d "{\"instance_ip\": \"$instance_ip\", \"retry_get\": $i, \"retry_send\": $j}"
                        [ $? = 0 ] && break
                        echo "Retry send_instance_ip $j"
                        sleep $RETRY_DELAY
                      done
                    params:
                      "$SERVER_ENDPOINT": { get_param: server_endpoint }

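- Start the server:

      python server.py -p 4242 -l <LOG_DIR>

  The instances send their reports to port 4242 (the port is hard-coded in the `user_data` script of the Heat template), so the server must listen on that port. The server logs incoming connections into the `<LOG_DIR>/instances_<timestamp>.txt` file (`<LOG_DIR>` defaults to `/tmp`). Each report line contains the source IP of the connection and the report sent by the instance, including the instance's IP.

  Note

  If the server is restarted, it creates a new `instances_<timestamp>.txt` file with a new timestamp.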

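- Provision VM instances:

1. Define the number of compute nodes in the cluster. Let's call this number `NUM_COMPUTES`.
2. Make sure that `IMAGE_ID` and `FLAVOR` exist.
3. Put the address of the monitoring server into `SERVER_ADDRESS`.
4. Create a Heat stack using the template above. The template also requires the `cidr` parameter of the private subnet, which has no default; `PRIVATE_CIDR` stands for the CIDR you choose. Use a unique `STACK_NAME` for every stack:

       heat stack-create -f instance_metadata.hot \
         -P "image=IMAGE_ID;flavor=FLAVOR;instance_count=NUM_COMPUTES;server_endpoint=SERVER_ADDRESS;cidr=PRIVATE_CIDR" \
         STACK_NAME

5. Repeat step 4 as many times as you need. Each stack places its instances into a server group with the anti-affinity policy, so one stack puts at most one instance on each compute node; every repetition therefore raises the density by one instance per compute node.
6. After each step, monitor `instances_<timestamp>.txt` using `wc -l` to validate that all instances have booted and connected to the HTTP server. Keep in mind that the file also contains the server start-up message.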
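Counting lines with `wc -l` also counts non-report lines such as the server start-up message. The sketch below is a slightly more precise check: it parses the report messages written by the monitoring server script (`Incoming connection: ip=<source IP>, <report>`), counts unique reports and tallies the `retry_get`/`retry_send` counters. Instances without floating IPs typically reach the server through their router's SNAT, so the logged source IP identifies the router rather than the instance; the sketch therefore treats a (source IP, `instance_ip`) pair as one instance. The regular expression and the helper name are assumptions derived from the script's logging format strings.

```python
import ast
import re
from collections import Counter

# Matches the message logged by PostHandler.do_POST, e.g.
# "... INFO:Incoming connection: ip=172.16.0.5, {'instance_ip': '10.0.0.5', ...}"
REPORT_RE = re.compile(
    r"Incoming connection: ip=(?P<src>[^,]+), (?P<data>\{.*\})\s*$")


def summarize_reports(lines):
    """Return (unique reports, retry_get tally, retry_send tally)."""
    reports = set()
    retry_get = Counter()
    retry_send = Counter()
    for line in lines:
        match = REPORT_RE.search(line)
        if not match:
            continue  # start-up, shutdown or error messages
        # The report is logged as a Python dict repr; literal_eval parses it
        # safely, including the u'' prefixes written by Python 2.
        data = ast.literal_eval(match.group("data"))
        key = (match.group("src"), data.get("instance_ip"))
        if key in reports:
            continue  # duplicate report from the same instance
        reports.add(key)
        retry_get[data.get("retry_get")] += 1
        retry_send[data.get("retry_send")] += 1
    return len(reports), retry_get, retry_send
```

Usage: open the current log file and pass the file object, e.g. `total, gets, sends = summarize_reports(open(path))`, then compare `total` with the number of instances you launched.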

5.27.4.1.4. Test Case 2: Additional integrity check

Optionally, one more test can be run between the density test (Test Case 1: VM density check) and other experiments on the environment, or between multiple density tests if they are run against the same OpenStack environment. The idea of this test is to create a group of resources and verify that it persists regardless of other operations performed on the environment (resource creation and deletion, heavy workloads, etc.).
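One way to make "stays persistent" checkable is to take a snapshot of the integrity resources right after the stack is created and compare it with a later snapshot. The sketch below assumes a snapshot is a mapping from instance name to its (fixed IP, floating IP) pair, collected by whatever means you prefer; the snapshot format and the function name are hypothetical and are not part of the tools used in this test plan.

```python
def diff_snapshots(before, after):
    """Compare two snapshots of the form {instance name: (fixed_ip, floating_ip)}.

    Returns the instances that disappeared, appeared, or whose addresses
    changed between the two snapshots; all lists are sorted by name.
    """
    missing = sorted(set(before) - set(after))
    added = sorted(set(after) - set(before))
    changed = sorted(name for name in set(before) & set(after)
                     if before[name] != after[name])
    return {"missing": missing, "added": added, "changed": changed}
```

An empty result for all three keys means the snapshot did not change; the connectivity checks below are still needed to confirm the resources actually work.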

5.27.4.1.4.1. Testing workflow¶

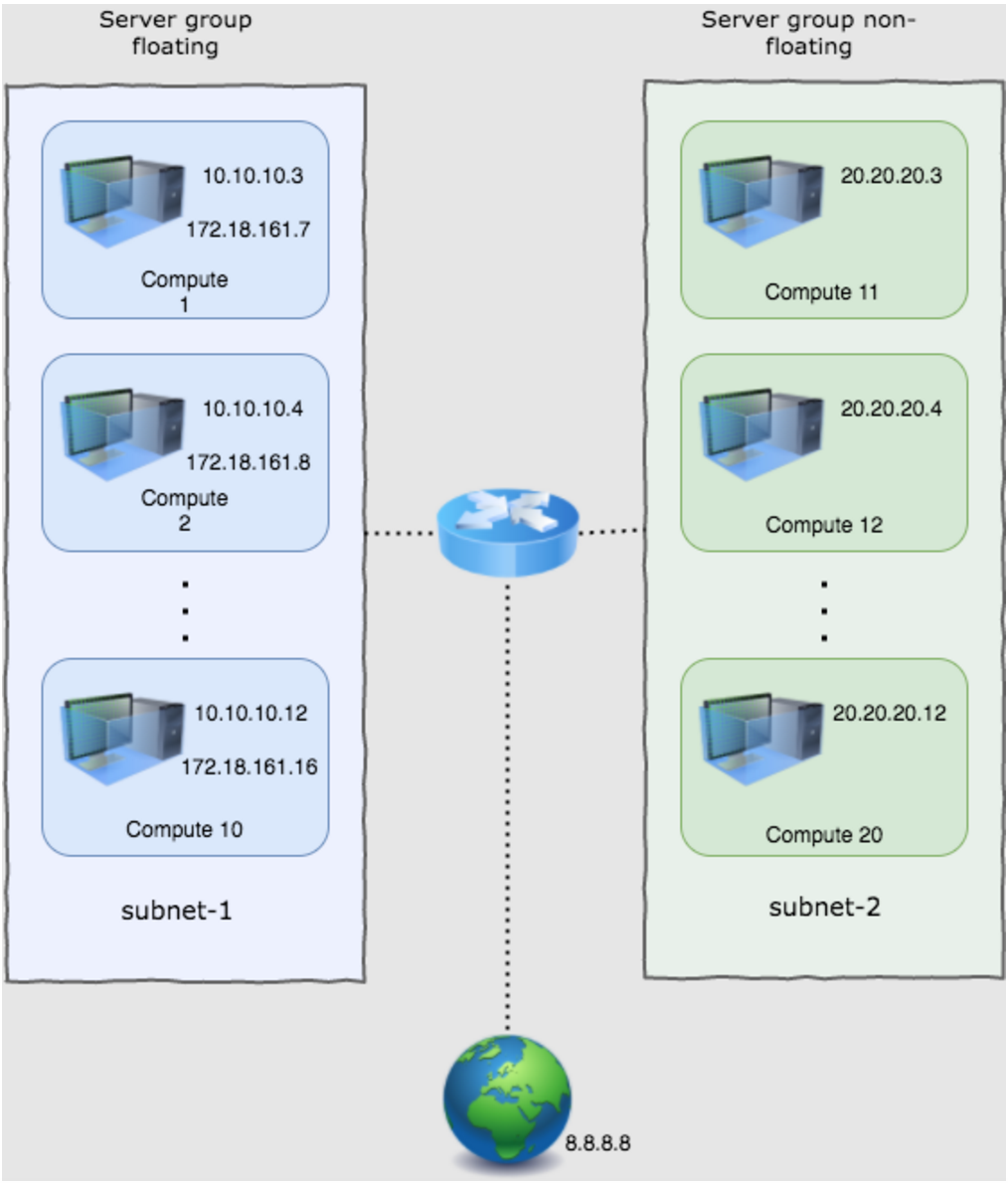

Create 20 instances in two server groups server-group-floating and server-group-non-floating in proportion 10:10, with each server group having the anti-affinity policy. Instances from different server groups are located in different subnets plugged into a router. Instances from server-group-floating have assigned floating IPs while instances from server-group-non-floating have only fixed IPs.

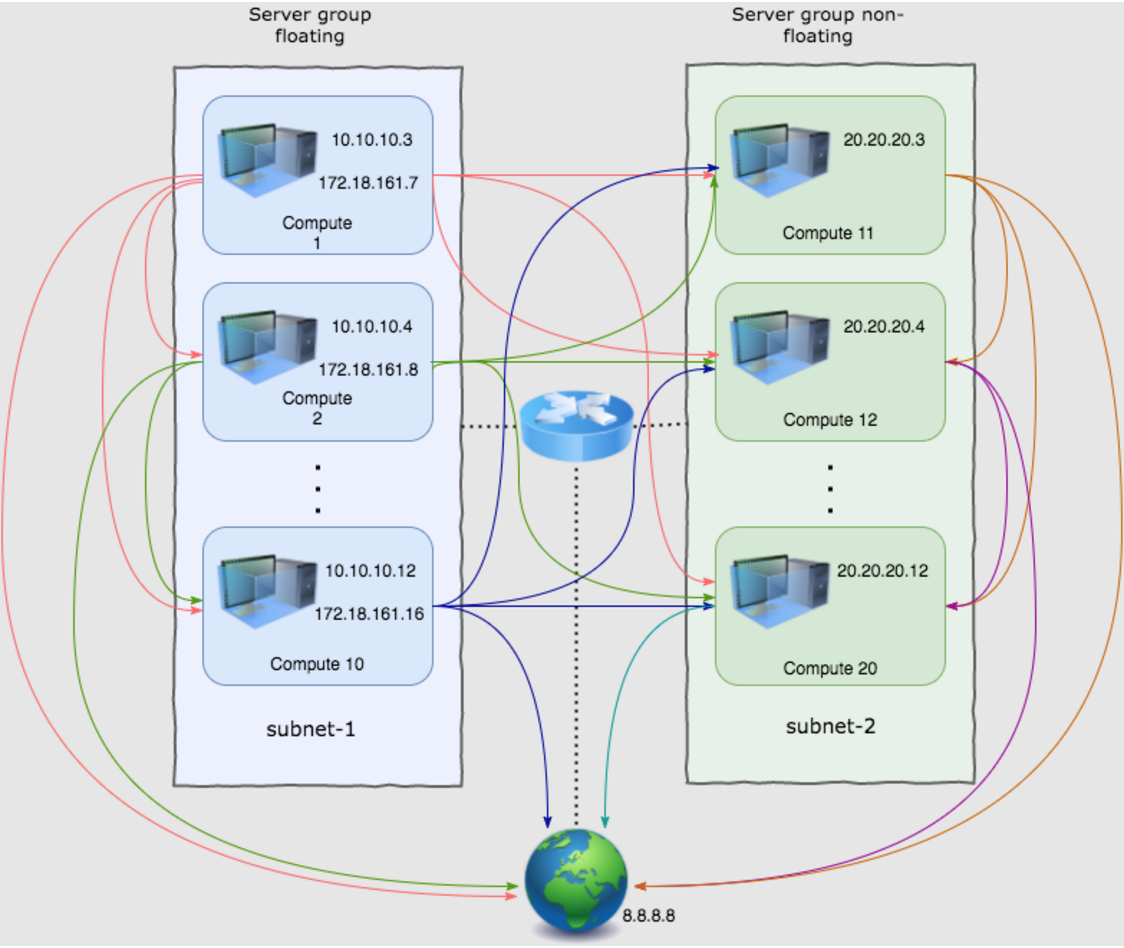

For each of the instances the following connectivity checks are made:

- SSH into an instance.

- Ping an external resource (e.g. 8.8.8.8).

- Ping other VMs (by fixed or floating IPs).

The lists of IPs to ping from each VM are built to cover all possible combinations with minimal redundancy. Having VMs from different subnets, with and without floating IPs, ping each other and an external resource (8.8.8.8) verifies that all possible traffic routes work, namely:

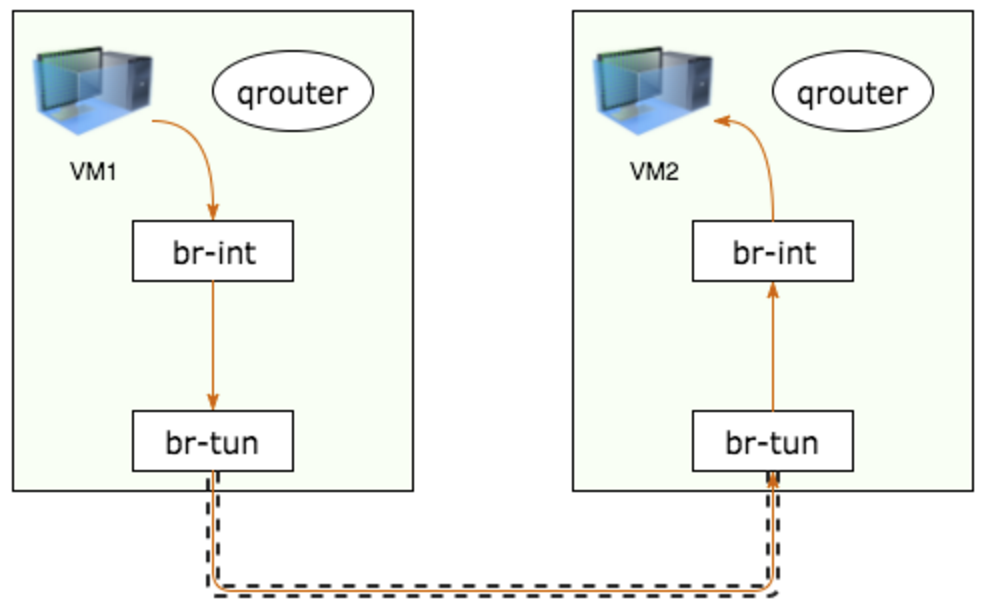

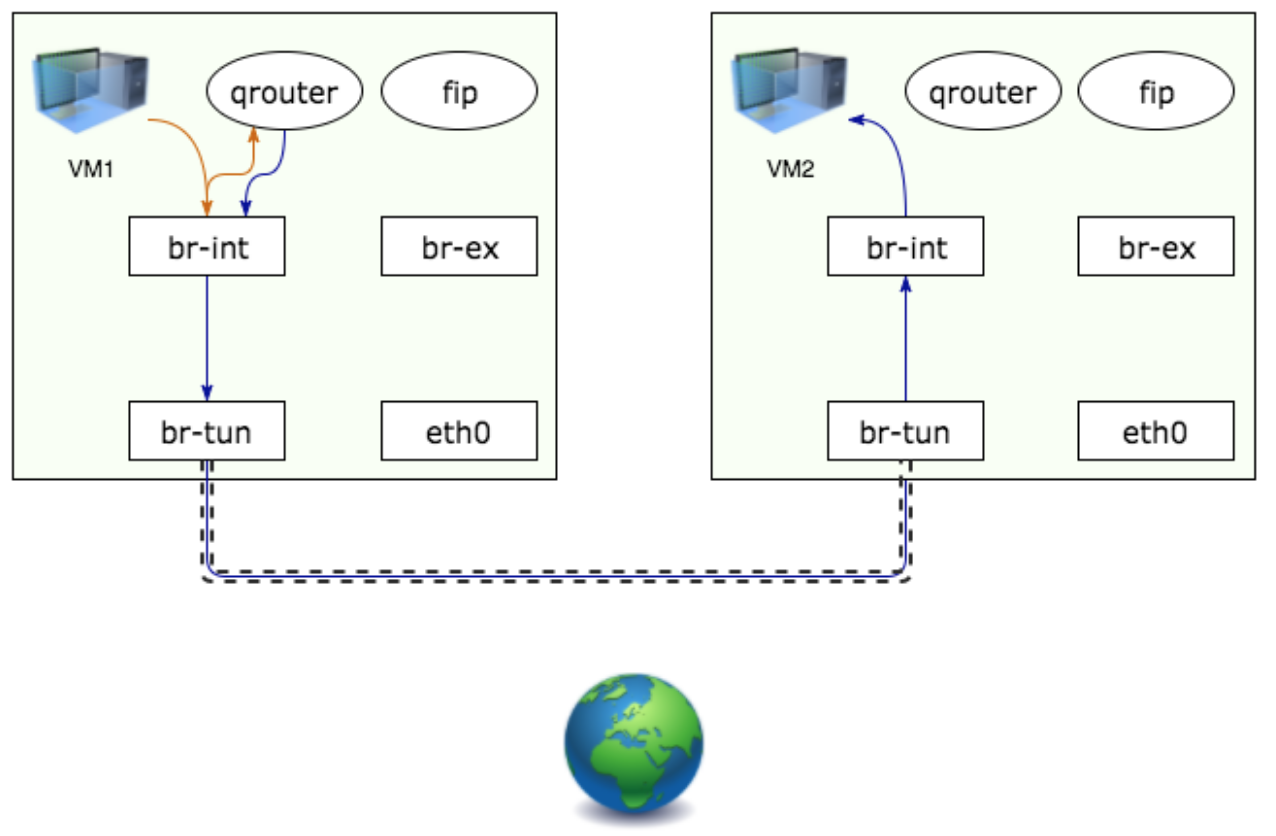

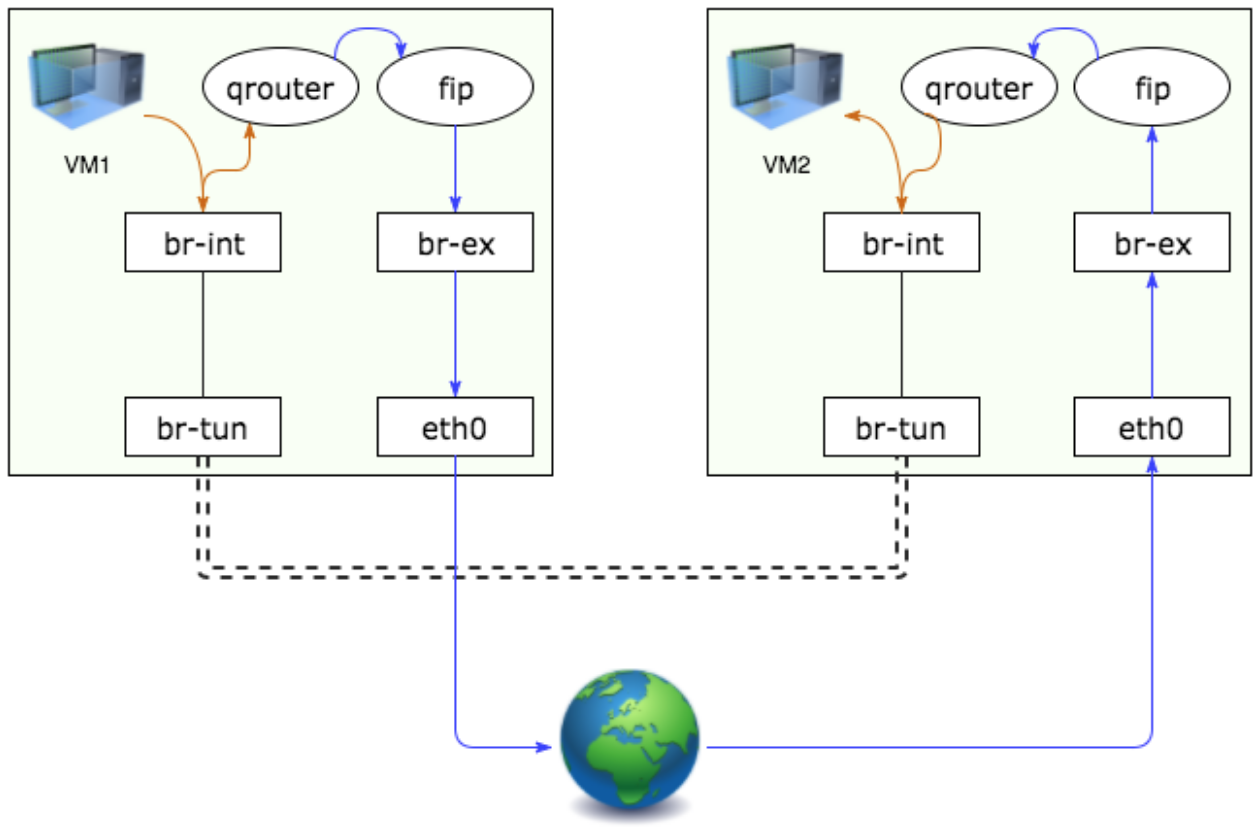

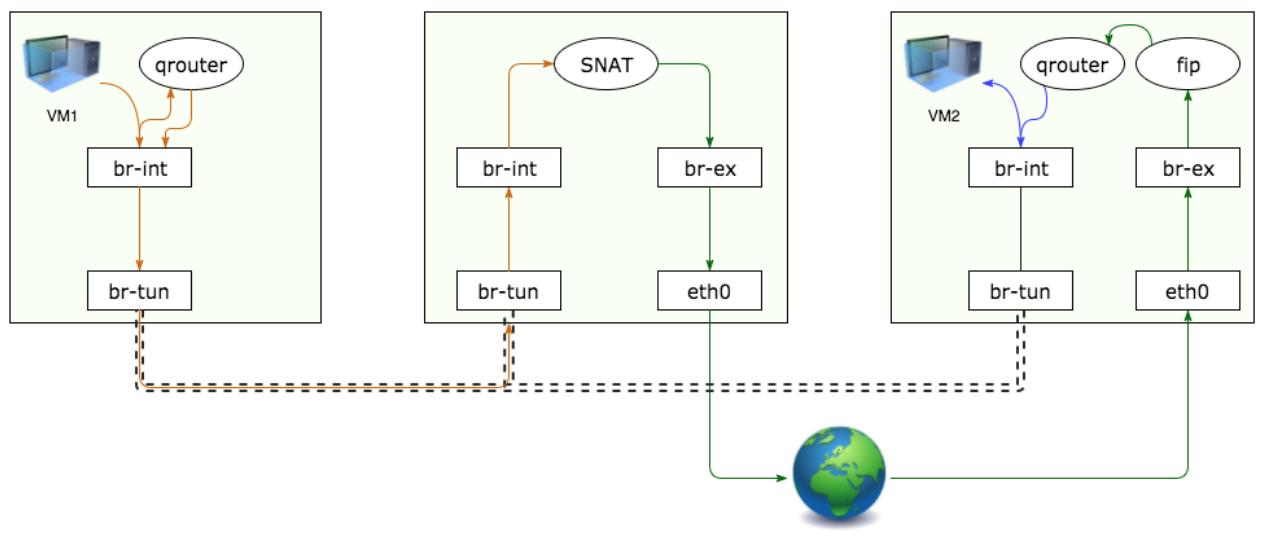

From fixed IP to fixed IP in the same subnet:

From fixed IP to fixed IP in different subnets:

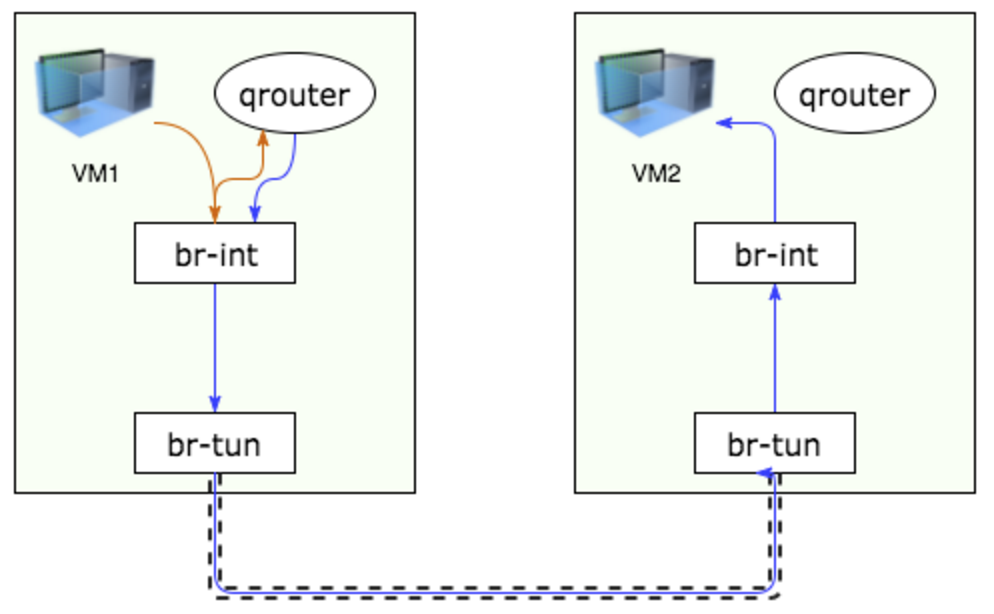

From floating IP to fixed IP (same path as in 2):

From floating IP to floating IP:

From fixed IP to floating IP:
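The traffic routes above can be turned into per-VM ping lists. The following sketch is one possible way to build such lists, not the algorithm used by the `connectivity_check` tool: each VM pings the external resource, one fixed IP in its own subnet, one fixed IP in another subnet and one floating IP of another VM. The `Vm` record, the route labels and the rule that a source counts as "floating" when it has a floating IP are illustrative assumptions.

```python
from collections import namedtuple

EXTERNAL_IP = "8.8.8.8"

# Route labels follow the list of traffic routes in this test plan.
SAME_SUBNET = "fixed -> fixed, same subnet"
OTHER_SUBNET = "fixed -> fixed, different subnets"
FLOATING_TO_FIXED = "floating -> fixed"
FLOATING_TO_FLOATING = "floating -> floating"
FIXED_TO_FLOATING = "fixed -> floating"
ROUTES = {SAME_SUBNET, OTHER_SUBNET, FLOATING_TO_FIXED,
          FLOATING_TO_FLOATING, FIXED_TO_FLOATING}

# Hypothetical VM record; floating_ip is None for VMs without a floating IP.
Vm = namedtuple("Vm", "name subnet fixed_ip floating_ip")


def classify(src, dst, dst_ip):
    """Return the route label exercised when src pings dst at dst_ip."""
    if dst.floating_ip is not None and dst_ip == dst.floating_ip:
        return FLOATING_TO_FLOATING if src.floating_ip else FIXED_TO_FLOATING
    if dst.subnet == src.subnet:
        return SAME_SUBNET
    return FLOATING_TO_FIXED if src.floating_ip else OTHER_SUBNET


def build_ping_lists(vms):
    """Return {vm name: [target IPs]} with one target of each kind."""
    plan = {}
    for src in vms:
        others = [vm for vm in vms if vm.name != src.name]
        same = [vm.fixed_ip for vm in others if vm.subnet == src.subnet]
        other = [vm.fixed_ip for vm in others if vm.subnet != src.subnet]
        floating = [vm.floating_ip for vm in others if vm.floating_ip]
        # Take only the first candidate of each kind to keep redundancy low.
        plan[src.name] = [EXTERNAL_IP] + same[:1] + other[:1] + floating[:1]
    return plan


def covered_routes(vms, plan):
    """Return the set of route labels exercised by a ping plan."""
    by_name = {vm.name: vm for vm in vms}
    owner = {}
    for vm in vms:
        owner[vm.fixed_ip] = vm
        if vm.floating_ip:
            owner[vm.floating_ip] = vm
    routes = set()
    for name, targets in plan.items():
        for ip in targets:
            if ip in owner:  # the external resource belongs to no VM
                routes.add(classify(by_name[name], owner[ip], ip))
    return routes
```

With at least two VMs in each group, `covered_routes(vms, build_ping_lists(vms))` should contain all five route labels, which is a quick way to check that a ping plan is complete.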

5.27.4.1.4.2. Test steps

Create the integrity stack using the following Heat template:

    heat_template_version: 2013-05-23
    description: Template to create multiple instances.

    parameters:
      image:
        type: string
        description: Image used for servers
      flavor:
        type: string
        description: flavor used by the servers
        default: m1.micro
        constraints:
          - custom_constraint: nova.flavor
      public_network:
        type: string
        label: Public network name or ID
        description: Public network with floating IP addresses.
        default: admin_floating_net
      instance_count_floating:
        type: number
        description: Number of instances with floating IPs to create
        default: 1
      instance_count_non_floating:
        type: number
        description: Number of instances without floating IPs to create
        default: 1

    resources:
      private_network:
        type: OS::Neutron::Net
        properties:
          name: integrity_network

      private_subnet_floating:
        type: OS::Neutron::Subnet
        properties:
          name: integrity_floating_subnet
          network_id: { get_resource: private_network }
          cidr: 10.10.10.0/24
          dns_nameservers:
            - 8.8.8.8

      private_subnet_non_floating:
        type: OS::Neutron::Subnet
        properties:
          name: integrity_non_floating_subnet
          network_id: { get_resource: private_network }
          cidr: 20.20.20.0/24
          dns_nameservers:
            - 8.8.8.8

      router:
        type: OS::Neutron::Router
        properties:
          name: integrity_router
          external_gateway_info:
            network: { get_param: public_network }

      router_interface_floating:
        type: OS::Neutron::RouterInterface
        properties:
          router_id: { get_resource: router }
          subnet: { get_resource: private_subnet_floating }

      router_interface_non_floating:
        type: OS::Neutron::RouterInterface
        properties:
          router_id: { get_resource: router }
          subnet: { get_resource: private_subnet_non_floating }

      server_security_group:
        type: OS::Neutron::SecurityGroup
        properties:
          rules: [
            {remote_ip_prefix: 0.0.0.0/0,
            protocol: tcp,
            port_range_min: 1,
            port_range_max: 65535},
            {remote_ip_prefix: 0.0.0.0/0,
            protocol: udp,
            port_range_min: 1,
            port_range_max: 65535},
            {remote_ip_prefix: 0.0.0.0/0,
            protocol: icmp}]

      policy_group_floating:
        type: OS::Nova::ServerGroup
        properties:
          name: nova_server_group_floating
          policies: [anti-affinity]

      policy_group_non_floating:
        type: OS::Nova::ServerGroup
        properties:
          name: nova_server_group_non_floating
          policies: [anti-affinity]

      server_group_floating:
        type: OS::Heat::ResourceGroup
        properties:
          count: { get_param: instance_count_floating }
          resource_def:
            type: OS::Nova::Server
            properties:
              image: { get_param: image }
              flavor: { get_param: flavor }
              networks:
                - subnet: { get_resource: private_subnet_floating }
              scheduler_hints: { group: { get_resource: policy_group_floating } }
              security_groups: [{ get_resource: server_security_group }]

      server_group_non_floating:
        type: OS::Heat::ResourceGroup
        properties:
          count: { get_param: instance_count_non_floating }
          resource_def:
            type: OS::Nova::Server
            properties:
              image: { get_param: image }
              flavor: { get_param: flavor }
              networks:
                - subnet: { get_resource: private_subnet_non_floating }
              scheduler_hints: { group: { get_resource: policy_group_non_floating } }
              security_groups: [{ get_resource: server_security_group }]

Use this command to create the Heat stack:

    heat stack-create -f integrity_check/integrity_vm.hot -P "image=IMAGE_ID;flavor=m1.micro;instance_count_floating=10;instance_count_non_floating=10" integrity_stack

Assign floating IPs to the instances:

    assign_floatingips --sg-floating nova_server_group_floating

Run the connectivity check:

    connectivity_check -s ~/ips.json

Note

`~/ips.json` is the path to the file used to store the instances' IPs.

Note

Make sure to run this check only on a controller node that hosts the qdhcp namespace of integrity_network.

At the very end of testing, clean up the integrity stack:

    assign_floatingips --sg-floating nova_server_group_floating --cleanup
    heat stack-delete integrity_stack
    rm ~/ips.json

5.27.4.2. Reports

- Test plan execution reports: