6.2. Ceph RBD performance report

| Abstract: | This document includes Ceph RBD performance test results for 40 OSD nodes. The test cluster contains 40 OSD servers and forms a 581 TiB Ceph cluster. |

|---|

6.2.1. Environment description

The environment contains 3 types of servers:

- ceph-mon node

- ceph-osd node

- compute node

| Role | Server count | Hardware type |
|---|---|---|
| compute | 1 | 1 |
| ceph-mon | 3 | 1 |
| ceph-osd | 40 | 2 |

6.2.1.1. Hardware configuration of each server

Each server has one of the 2 hardware configurations described in the tables below.

| server | vendor,model | Dell PowerEdge R630 |

| CPU | vendor,model | Intel,E5-2680 v3 |

| processor_count | 2 | |

| core_count | 12 | |

| frequency_MHz | 2500 | |

| RAM | vendor,model | Samsung, M393A2G40DB0-CPB |

| amount_MB | 262144 | |

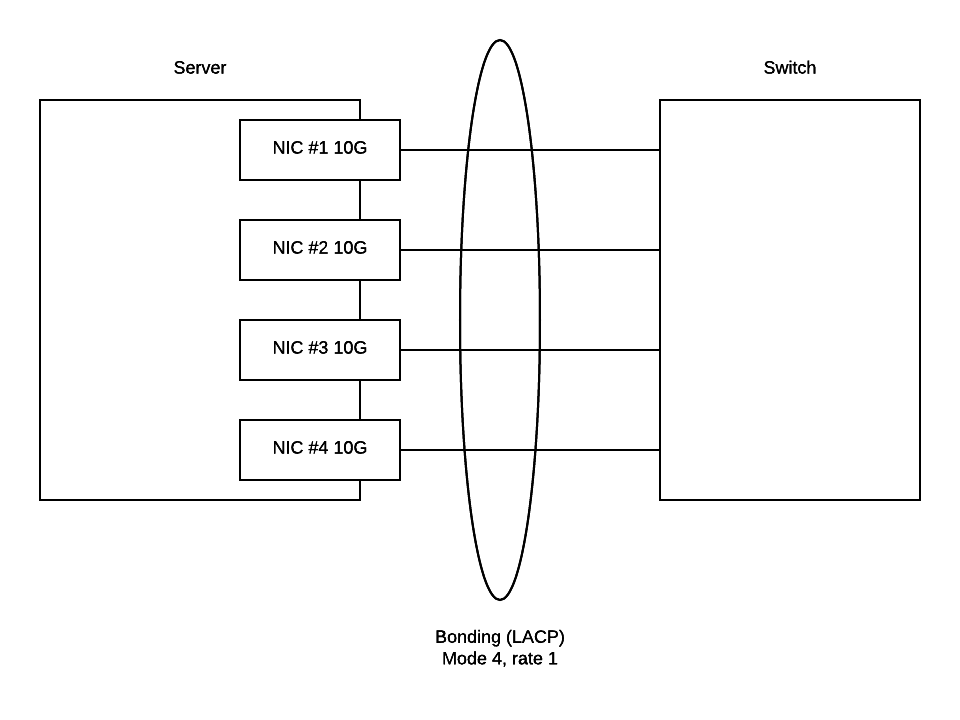

| NETWORK | interface_name s | eno1, eno2 |

| vendor,model | Intel,X710 Dual Port | |

| bandwidth | 10G | |

| interface_names | enp3s0f0, enp3s0f1 | |

| vendor,model | Intel,X710 Dual Port | |

| bandwidth | 10G | |

| STORAGE | dev_name | /dev/sda |

| vendor,model | raid1 - Dell, PERC H730P Mini

2 disks Intel S3610

|

|

| SSD/HDD | SSD | |

| size | 3,6TB |

| Component | Parameter | Value |
|---|---|---|
| server | vendor,model | Lenovo ThinkServer RD650 |
| CPU | vendor,model | Intel,E5-2670 v3 |
| | processor_count | 2 |
| | core_count | 12 |
| | frequency_MHz | 2500 |
| RAM | vendor,model | Samsung, M393A2G40DB0-CPB |
| | amount_MB | 131916 |
| NETWORK | interface_names | enp3s0f0, enp3s0f1 |
| | vendor,model | Intel,X710 Dual Port |
| | bandwidth | 10G |
| | interface_names | ens2f0, ens2f1 |
| | vendor,model | Intel,X710 Dual Port |
| | bandwidth | 10G |
| STORAGE | vendor,model | 2 disks Intel S3610 |
| | SSD/HDD | SSD |
| | size | 799GB |
| | vendor,model | 10 disks 2T |
| | SSD/HDD | HDD |
| | size | 2TB |
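As a rough cross-check of the storage figures, the sketch below converts decimal terabytes (as printed on disk labels) into TiB and sums the HDD capacity over the OSD servers. It assumes that "2TB" means 2×10^12 bytes and that all 10 HDDs in each of the 40 ceph-osd servers hold OSD data; the report states neither, and the function name `raw_capacity_tib` is hypothetical.

```python
TB = 10**12   # decimal terabyte, as used on disk labels
TIB = 2**40   # binary tebibyte, the unit used in the abstract


def raw_capacity_tib(nodes: int, disks_per_node: int, disk_size_tb: float) -> float:
    """Return the summed raw disk capacity in TiB (no replication, no overhead)."""
    return nodes * disks_per_node * disk_size_tb * TB / TIB


if __name__ == "__main__":
    # Assumption: 40 ceph-osd servers, each contributing 10 HDDs of 2 TB.
    print(f"{raw_capacity_tib(40, 10, 2.0):.1f} TiB")
```

Under these assumptions the raw upper bound is about 727.6 TiB. The abstract reports 581 TiB; the report does not say how that figure was obtained, so the sketch only bounds the capacity and does not reproduce the reported number.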

6.2.1.3. Software configuration on servers with controller, compute and compute-osd roles

Ceph was deployed with the Decapod tool. Cluster configuration for Decapod:

ceph_config.yaml

| Software | Version |
|---|---|
| Ceph | Jewel |
| Ubuntu | Ubuntu 16.04 LTS |

The outputs of some commands and the contents of the /etc folder are available in the following archives:

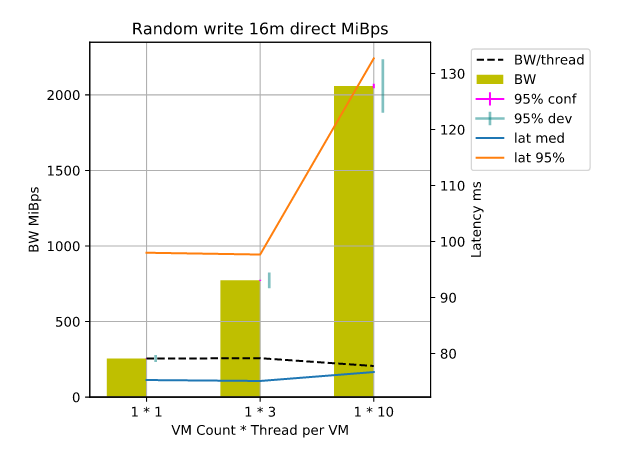

6.2.2. Testing process

- Run a virtual machine on the compute node with an RBD disk attached.

- SSH into the VM operating system.

- Clone the Wally repository.

- Create the ceph_raw.yaml file in the cloned repository.

- Run the command: python -m wally test ceph_rbd_2 ./ceph_raw.yaml
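The final command in the steps above can also be scripted. The following is a minimal sketch that assembles the Wally command line and runs it from the cloned repository. The helper names `build_wally_command` and `run_wally` and the `repo_dir` parameter are hypothetical; only the command itself is taken from this report, and the contents of ceph_raw.yaml are not shown here.

```python
import subprocess
from pathlib import Path
from typing import List


def build_wally_command(test_name: str, config: str) -> List[str]:
    """Build the argument list for `python -m wally test <name> <config>`."""
    return ["python", "-m", "wally", "test", test_name, config]


def run_wally(repo_dir: str, test_name: str = "ceph_rbd_2",
              config: str = "./ceph_raw.yaml") -> int:
    """Run Wally inside the cloned repository and return its exit code."""
    repo = Path(repo_dir)
    if not (repo / config).is_file():
        raise FileNotFoundError(f"{config} not found in {repo}")
    # Assumption: `python` on PATH is the interpreter with Wally's
    # dependencies installed.
    result = subprocess.run(build_wally_command(test_name, config), cwd=repo)
    return result.returncode
```

Calling `run_wally("/path/to/cloned/repo")` inside the VM would then be equivalent to running the command by hand from that directory; the path is a placeholder.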

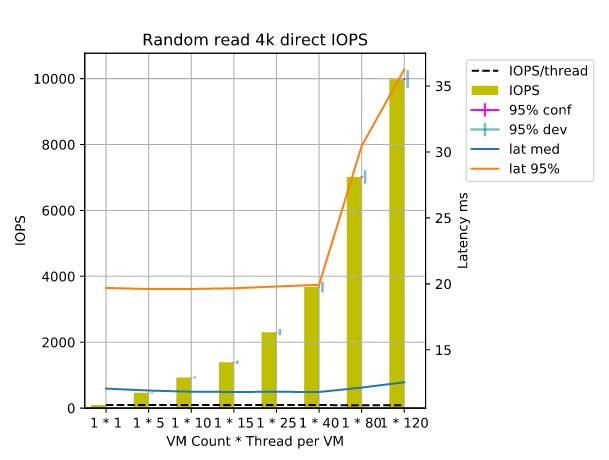

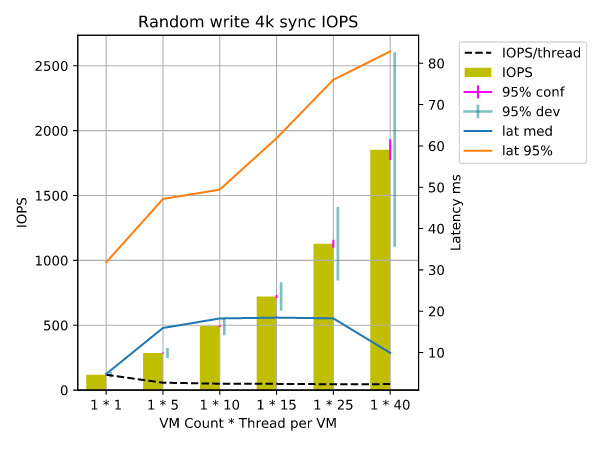

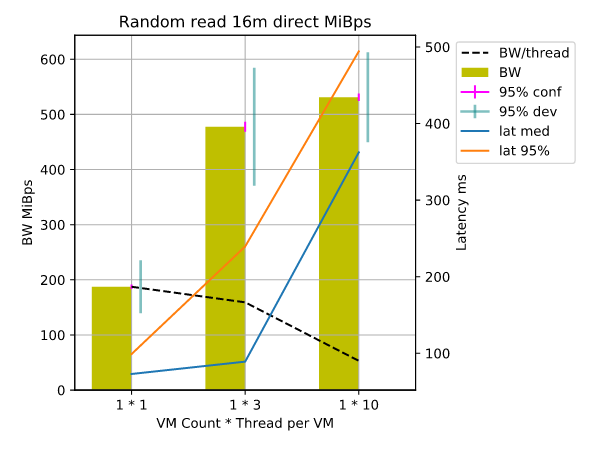

As a result, we got the following HTML file: