[ English | русский | 한국어 (대한민국) | Deutsch | Indonesia | English (United Kingdom) | français ]

Пример маршрутизируемого окружения¶

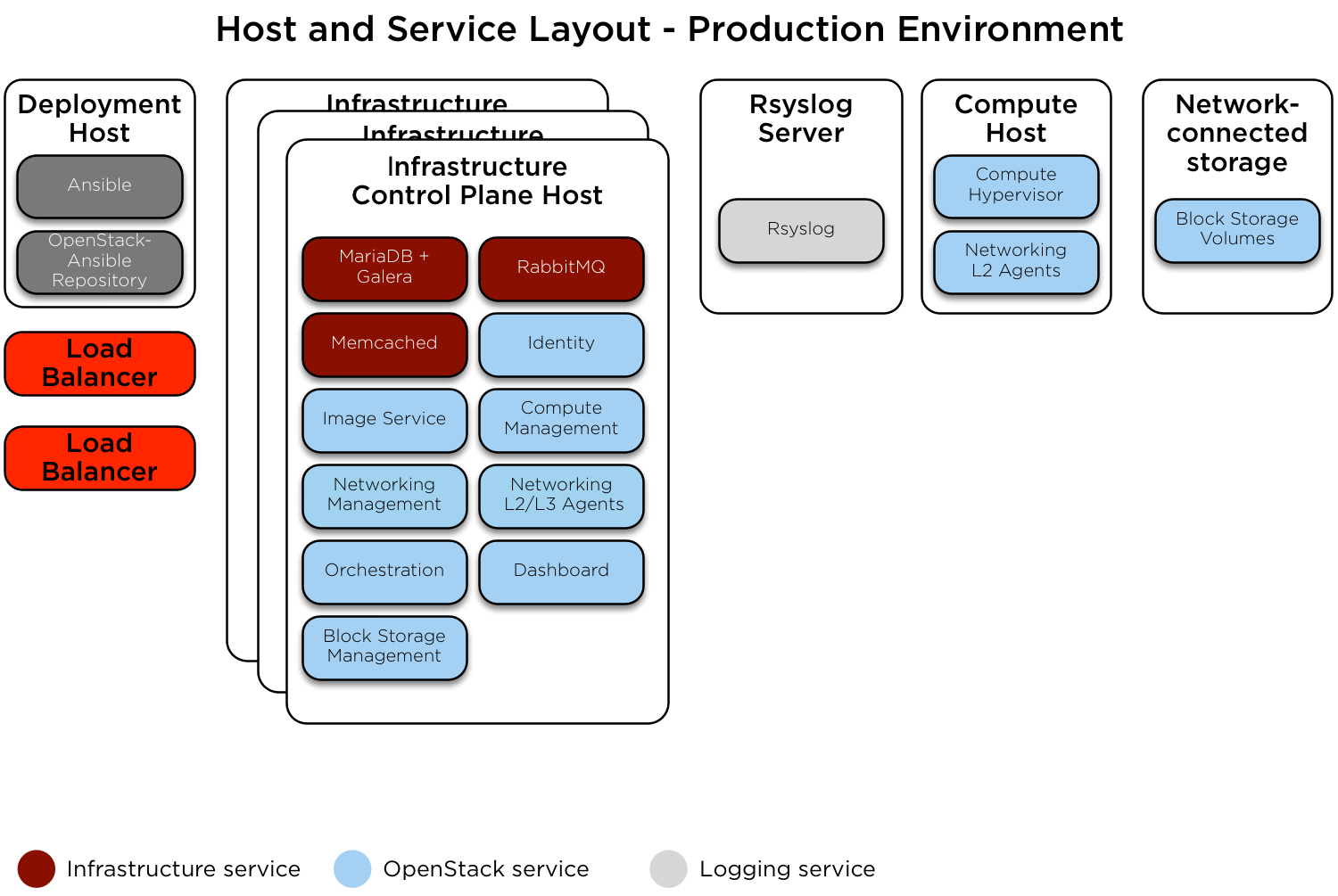

В данной секции описывается пример готового к эксплуатации окружения для рабочего развертывания OpenStack-Ansible (OSA) с высокодоступными сервисами, где сети провайдера и соединение между физическими серверами маршрутизируются (layer 3)

Данное иллюстративное окружение имеет следующие характеристики:

Три инфраструктурных (управляющих) хоста

Два хоста под вычислительные ресурсы

Одно NFS устройство для данных

Один хост для сбора логов

Несколько сетевых карт (NIC), настроенных как агрегированные, для каждого хоста

Полный набор вычислительных сервисов с сервисом Телеметрии (ceilometer), с настроенным NFS, как хранилищем, для сервисов Образов (glance) и Блочного Хранилища (cinder)

Добавлены статические маршруты для связи между управляющей сетью, туннелированной сетью и сетью хранилища данных для каждого блока. Адрес шлюза это первый используемый адрес в каждой подсети.

Настройка сети¶

Распределение сетевых CIDR/VLAN¶

Следующие CIDR назначения используются для данного окружения.

Сеть |

CIDR |

VLAN |

|---|---|---|

Управляющая сеть блока 1 |

172.29.236.0/24 |

10 |

Туннелированная (VXLAN) сеть блока 1 |

172.29.237.0/24 |

30 |

Сеть хранилища данных блока 1 |

172.29.238.0/24 |

20 |

Управляющая сеть блока 2 |

172.29.239.0/24 |

10 |

Туннелированная (VXLAN) сеть блока 2 |

172.29.240.0/24 |

30 |

Сеть хранилища данных блока 2 |

172.29.241.0/24 |

20 |

Управляющая сеть блока 3 |

172.29.242.0/24 |

10 |

Туннелированная (VXLAN) сеть блока 3 |

172.29.243.0/24 |

30 |

Сеть хранилища данных блока 3 |

172.29.244.0/24 |

20 |

Управляющая сеть блока 4 |

172.29.245.0/24 |

10 |

Туннелированная (VXLAN) сеть блока 4 |

172.29.246.0/24 |

30 |

Сеть хранилища данных блока 4 |

172.29.247.0/24 |

20 |

Назначения IP¶

Следующие имена хостов и IP адреса используются для данного окружения.

Имя сервера |

Управляющий IP |

Туннелированный (VXLAN) IP |

IP хранилища данных |

|---|---|---|---|

lb_vip_address |

172.29.236.9 |

||

infra1 |

172.29.236.10 |

172.29.237.10 |

|

infra2 |

172.29.239.10 |

172.29.240.10 |

|

infra3 |

172.29.242.10 |

172.29.243.10 |

|

log1 |

172.29.236.11 |

||

Хранилище NFS |

172.29.244.15 |

||

compute1 |

172.29.245.10 |

172.29.246.10 |

172.29.247.10 |

compute2 |

172.29.245.11 |

172.29.246.11 |

172.29.247.11 |

Настройка сети сервера¶

На каждом сервере должны быть настроены корректные сетевые мосты. Ниже мы приводим файл /etc/network/interfaces для infra1.

Примечание

Если в вашем окружении нет интерфейса eth0, но вместо него используется p1p1, либо же какое-нибудь другое имя интерфейса, убедитесь, что все отсылки к eth0 в конфигурационных файлах заменены на подходящее имя. Это же применимо ко всем дополнительным сетевым интерфейсам.

# This is a multi-NIC bonded configuration to implement the required bridges

# for OpenStack-Ansible. This illustrates the configuration of the first

# Infrastructure host and the IP addresses assigned should be adapted

# for implementation on the other hosts.

#

# After implementing this configuration, the host will need to be

# rebooted.

# Assuming that eth0/1 and eth2/3 are dual port NIC's we pair

# eth0 with eth2 and eth1 with eth3 for increased resiliency

# in the case of one interface card failing.

auto eth0

iface eth0 inet manual

bond-master bond0

bond-primary eth0

auto eth1

iface eth1 inet manual

bond-master bond1

bond-primary eth1

auto eth2

iface eth2 inet manual

bond-master bond0

auto eth3

iface eth3 inet manual

bond-master bond1

# Create a bonded interface. Note that the "bond-slaves" is set to none. This

# is because the bond-master has already been set in the raw interfaces for

# the new bond0.

auto bond0

iface bond0 inet manual

bond-slaves none

bond-mode active-backup

bond-miimon 100

bond-downdelay 200

bond-updelay 200

# This bond will carry VLAN and VXLAN traffic to ensure isolation from

# control plane traffic on bond0.

auto bond1

iface bond1 inet manual

bond-slaves none

bond-mode active-backup

bond-miimon 100

bond-downdelay 250

bond-updelay 250

# Container/Host management VLAN interface

auto bond0.10

iface bond0.10 inet manual

vlan-raw-device bond0

# OpenStack Networking VXLAN (tunnel/overlay) VLAN interface

auto bond1.30

iface bond1.30 inet manual

vlan-raw-device bond1

# Storage network VLAN interface (optional)

auto bond0.20

iface bond0.20 inet manual

vlan-raw-device bond0

# Container/Host management bridge

auto br-mgmt

iface br-mgmt inet static

bridge_stp off

bridge_waitport 0

bridge_fd 0

bridge_ports bond0.10

address 172.29.236.10

netmask 255.255.255.0

gateway 172.29.236.1

dns-nameservers 8.8.8.8 8.8.4.4

# OpenStack Networking VXLAN (tunnel/overlay) bridge

#

# The COMPUTE, NETWORK and INFRA nodes must have an IP address

# on this bridge.

#

auto br-vxlan

iface br-vxlan inet static

bridge_stp off

bridge_waitport 0

bridge_fd 0

bridge_ports bond1.30

address 172.29.237.10

netmask 255.255.252.0

# OpenStack Networking VLAN bridge

auto br-vlan

iface br-vlan inet manual

bridge_stp off

bridge_waitport 0

bridge_fd 0

bridge_ports bond1

# compute1 Network VLAN bridge

#auto br-vlan

#iface br-vlan inet manual

# bridge_stp off

# bridge_waitport 0

# bridge_fd 0

#

# For tenant vlan support, create a veth pair to be used when the neutron

# agent is not containerized on the compute hosts. 'eth12' is the value used on

# the host_bind_override parameter of the br-vlan network section of the

# openstack_user_config example file. The veth peer name must match the value

# specified on the host_bind_override parameter.

#

# When the neutron agent is containerized it will use the container_interface

# value of the br-vlan network, which is also the same 'eth12' value.

#

# Create veth pair, do not abort if already exists

# pre-up ip link add br-vlan-veth type veth peer name eth12 || true

# Set both ends UP

# pre-up ip link set br-vlan-veth up

# pre-up ip link set eth12 up

# Delete veth pair on DOWN

# post-down ip link del br-vlan-veth || true

# bridge_ports bond1 br-vlan-veth

# Storage bridge (optional)

#

# Only the COMPUTE and STORAGE nodes must have an IP address

# on this bridge. When used by infrastructure nodes, the

# IP addresses are assigned to containers which use this

# bridge.

#

auto br-storage

iface br-storage inet manual

bridge_stp off

bridge_waitport 0

bridge_fd 0

bridge_ports bond0.20

# compute1 Storage bridge

#auto br-storage

#iface br-storage inet static

# bridge_stp off

# bridge_waitport 0

# bridge_fd 0

# bridge_ports bond0.20

# address 172.29.247.10

# netmask 255.255.255.0

Настройка развертывания¶

Планировка окружения¶

Файл /etc/openstack_deploy/openstack_user_config.yml определяет, как будет выглядеть окружение.

Для каждого блока должна быть задана группа, содержащая все хосты для данного блока.

С определенными сетями провайдера, address_prefix используется для переопределения префикса ключа, добавленного на каждый хост, который содержит информацию об IP адресе. Это, обычно, должен быть один из container, tunnel или storage. reference_group содержит имя определенной группы и используется для ограничения зоны видимости каждой сети провайдера к данной группе.

Статические маршруты добавлены для коммуникации сетей провайдера между блоками.

Следующая настройка описывает устройство данного окружения.

---

cidr_networks:

pod1_container: 172.29.236.0/24

pod2_container: 172.29.237.0/24

pod3_container: 172.29.238.0/24

pod4_container: 172.29.239.0/24

pod1_tunnel: 172.29.240.0/24

pod2_tunnel: 172.29.241.0/24

pod3_tunnel: 172.29.242.0/24

pod4_tunnel: 172.29.243.0/24

pod1_storage: 172.29.244.0/24

pod2_storage: 172.29.245.0/24

pod3_storage: 172.29.246.0/24

pod4_storage: 172.29.247.0/24

used_ips:

- "172.29.236.1,172.29.236.50"

- "172.29.237.1,172.29.237.50"

- "172.29.238.1,172.29.238.50"

- "172.29.239.1,172.29.239.50"

- "172.29.240.1,172.29.240.50"

- "172.29.241.1,172.29.241.50"

- "172.29.242.1,172.29.242.50"

- "172.29.243.1,172.29.243.50"

- "172.29.244.1,172.29.244.50"

- "172.29.245.1,172.29.245.50"

- "172.29.246.1,172.29.246.50"

- "172.29.247.1,172.29.247.50"

global_overrides:

internal_lb_vip_address: internal-openstack.example.com

#

# The below domain name must resolve to an IP address

# in the CIDR specified in haproxy_keepalived_external_vip_cidr.

# If using different protocols (https/http) for the public/internal

# endpoints the two addresses must be different.

#

external_lb_vip_address: openstack.example.com

management_bridge: "br-mgmt"

provider_networks:

- network:

container_bridge: "br-mgmt"

container_type: "veth"

container_interface: "eth1"

ip_from_q: "pod1_container"

address_prefix: "container"

type: "raw"

group_binds:

- all_containers

- hosts

reference_group: "pod1_hosts"

is_container_address: true

# Containers in pod1 need routes to the container networks of other pods

static_routes:

# Route to container networks

- cidr: 172.29.236.0/22

gateway: 172.29.236.1

- network:

container_bridge: "br-mgmt"

container_type: "veth"

container_interface: "eth1"

ip_from_q: "pod2_container"

address_prefix: "container"

type: "raw"

group_binds:

- all_containers

- hosts

reference_group: "pod2_hosts"

is_container_address: true

# Containers in pod2 need routes to the container networks of other pods

static_routes:

# Route to container networks

- cidr: 172.29.236.0/22

gateway: 172.29.237.1

- network:

container_bridge: "br-mgmt"

container_type: "veth"

container_interface: "eth1"

ip_from_q: "pod3_container"

address_prefix: "container"

type: "raw"

group_binds:

- all_containers

- hosts

reference_group: "pod3_hosts"

is_container_address: true

# Containers in pod3 need routes to the container networks of other pods

static_routes:

# Route to container networks

- cidr: 172.29.236.0/22

gateway: 172.29.238.1

- network:

container_bridge: "br-mgmt"

container_type: "veth"

container_interface: "eth1"

ip_from_q: "pod4_container"

address_prefix: "container"

type: "raw"

group_binds:

- all_containers

- hosts

reference_group: "pod4_hosts"

is_container_address: true

# Containers in pod4 need routes to the container networks of other pods

static_routes:

# Route to container networks

- cidr: 172.29.236.0/22

gateway: 172.29.239.1

- network:

container_bridge: "br-vxlan"

container_type: "veth"

container_interface: "eth10"

ip_from_q: "pod1_tunnel"

address_prefix: "tunnel"

type: "vxlan"

range: "1:1000"

net_name: "vxlan"

group_binds:

- neutron_linuxbridge_agent

reference_group: "pod1_hosts"

# Containers in pod1 need routes to the tunnel networks of other pods

static_routes:

# Route to tunnel networks

- cidr: 172.29.240.0/22

gateway: 172.29.240.1

- network:

container_bridge: "br-vxlan"

container_type: "veth"

container_interface: "eth10"

ip_from_q: "pod2_tunnel"

address_prefix: "tunnel"

type: "vxlan"

range: "1:1000"

net_name: "vxlan"

group_binds:

- neutron_linuxbridge_agent

reference_group: "pod2_hosts"

# Containers in pod2 need routes to the tunnel networks of other pods

static_routes:

# Route to tunnel networks

- cidr: 172.29.240.0/22

gateway: 172.29.241.1

- network:

container_bridge: "br-vxlan"

container_type: "veth"

container_interface: "eth10"

ip_from_q: "pod3_tunnel"

address_prefix: "tunnel"

type: "vxlan"

range: "1:1000"

net_name: "vxlan"

group_binds:

- neutron_linuxbridge_agent

reference_group: "pod3_hosts"

# Containers in pod3 need routes to the tunnel networks of other pods

static_routes:

# Route to tunnel networks

- cidr: 172.29.240.0/22

gateway: 172.29.242.1

- network:

container_bridge: "br-vxlan"

container_type: "veth"

container_interface: "eth10"

ip_from_q: "pod4_tunnel"

address_prefix: "tunnel"

type: "vxlan"

range: "1:1000"

net_name: "vxlan"

group_binds:

- neutron_linuxbridge_agent

reference_group: "pod4_hosts"

# Containers in pod4 need routes to the tunnel networks of other pods

static_routes:

# Route to tunnel networks

- cidr: 172.29.240.0/22

gateway: 172.29.243.1

- network:

container_bridge: "br-vlan"

container_type: "veth"

container_interface: "eth12"

host_bind_override: "eth12"

type: "flat"

net_name: "flat"

group_binds:

- neutron_linuxbridge_agent

- network:

container_bridge: "br-vlan"

container_type: "veth"

container_interface: "eth11"

type: "vlan"

range: "101:200,301:400"

net_name: "vlan"

group_binds:

- neutron_linuxbridge_agent

- network:

container_bridge: "br-storage"

container_type: "veth"

container_interface: "eth2"

ip_from_q: "pod1_storage"

address_prefix: "storage"

type: "raw"

group_binds:

- glance_api

- cinder_api

- cinder_volume

- nova_compute

reference_group: "pod1_hosts"

# Containers in pod1 need routes to the storage networks of other pods

static_routes:

# Route to storage networks

- cidr: 172.29.244.0/22

gateway: 172.29.244.1

- network:

container_bridge: "br-storage"

container_type: "veth"

container_interface: "eth2"

ip_from_q: "pod2_storage"

address_prefix: "storage"

type: "raw"

group_binds:

- glance_api

- cinder_api

- cinder_volume

- nova_compute

reference_group: "pod2_hosts"

# Containers in pod2 need routes to the storage networks of other pods

static_routes:

# Route to storage networks

- cidr: 172.29.244.0/22

gateway: 172.29.245.1

- network:

container_bridge: "br-storage"

container_type: "veth"

container_interface: "eth2"

ip_from_q: "pod3_storage"

address_prefix: "storage"

type: "raw"

group_binds:

- glance_api

- cinder_api

- cinder_volume

- nova_compute

reference_group: "pod3_hosts"

# Containers in pod3 need routes to the storage networks of other pods

static_routes:

# Route to storage networks

- cidr: 172.29.244.0/22

gateway: 172.29.246.1

- network:

container_bridge: "br-storage"

container_type: "veth"

container_interface: "eth2"

ip_from_q: "pod4_storage"

address_prefix: "storage"

type: "raw"

group_binds:

- glance_api

- cinder_api

- cinder_volume

- nova_compute

reference_group: "pod4_hosts"

# Containers in pod4 need routes to the storage networks of other pods

static_routes:

# Route to storage networks

- cidr: 172.29.244.0/22

gateway: 172.29.247.1

###

### Infrastructure

###

pod1_hosts:

infra1:

ip: 172.29.236.10

log1:

ip: 172.29.236.11

pod2_hosts:

infra2:

ip: 172.29.239.10

pod3_hosts:

infra3:

ip: 172.29.242.10

pod4_hosts:

compute1:

ip: 172.29.245.10

compute2:

ip: 172.29.245.11

# galera, memcache, rabbitmq, utility

shared-infra_hosts:

infra1:

ip: 172.29.236.10

infra2:

ip: 172.29.239.10

infra3:

ip: 172.29.242.10

# repository (apt cache, python packages, etc)

repo-infra_hosts:

infra1:

ip: 172.29.236.10

infra2:

ip: 172.29.239.10

infra3:

ip: 172.29.242.10

# load balancer

# Ideally the load balancer should not use the Infrastructure hosts.

# Dedicated hardware is best for improved performance and security.

haproxy_hosts:

infra1:

ip: 172.29.236.10

infra2:

ip: 172.29.239.10

infra3:

ip: 172.29.242.10

# rsyslog server

log_hosts:

log1:

ip: 172.29.236.11

###

### OpenStack

###

# keystone

identity_hosts:

infra1:

ip: 172.29.236.10

infra2:

ip: 172.29.239.10

infra3:

ip: 172.29.242.10

# cinder api services

storage-infra_hosts:

infra1:

ip: 172.29.236.10

infra2:

ip: 172.29.239.10

infra3:

ip: 172.29.242.10

# glance

# The settings here are repeated for each infra host.

# They could instead be applied as global settings in

# user_variables, but are left here to illustrate that

# each container could have different storage targets.

image_hosts:

infra1:

ip: 172.29.236.11

container_vars:

limit_container_types: glance

glance_nfs_client:

- server: "172.29.244.15"

remote_path: "/images"

local_path: "/var/lib/glance/images"

type: "nfs"

options: "_netdev,auto"

infra2:

ip: 172.29.236.12

container_vars:

limit_container_types: glance

glance_nfs_client:

- server: "172.29.244.15"

remote_path: "/images"

local_path: "/var/lib/glance/images"

type: "nfs"

options: "_netdev,auto"

infra3:

ip: 172.29.236.13

container_vars:

limit_container_types: glance

glance_nfs_client:

- server: "172.29.244.15"

remote_path: "/images"

local_path: "/var/lib/glance/images"

type: "nfs"

options: "_netdev,auto"

# nova api, conductor, etc services

compute-infra_hosts:

infra1:

ip: 172.29.236.10

infra2:

ip: 172.29.239.10

infra3:

ip: 172.29.242.10

# heat

orchestration_hosts:

infra1:

ip: 172.29.236.10

infra2:

ip: 172.29.239.10

infra3:

ip: 172.29.242.10

# horizon

dashboard_hosts:

infra1:

ip: 172.29.236.10

infra2:

ip: 172.29.239.10

infra3:

ip: 172.29.242.10

# neutron server, agents (L3, etc)

network_hosts:

infra1:

ip: 172.29.236.10

infra2:

ip: 172.29.239.10

infra3:

ip: 172.29.242.10

# ceilometer (telemetry data collection)

metering-infra_hosts:

infra1:

ip: 172.29.236.10

infra2:

ip: 172.29.239.10

infra3:

ip: 172.29.242.10

# aodh (telemetry alarm service)

metering-alarm_hosts:

infra1:

ip: 172.29.236.10

infra2:

ip: 172.29.239.10

infra3:

ip: 172.29.242.10

# gnocchi (telemetry metrics storage)

metrics_hosts:

infra1:

ip: 172.29.236.10

infra2:

ip: 172.29.239.10

infra3:

ip: 172.29.242.10

# nova hypervisors

compute_hosts:

compute1:

ip: 172.29.245.10

compute2:

ip: 172.29.245.11

# ceilometer compute agent (telemetry data collection)

metering-compute_hosts:

compute1:

ip: 172.29.245.10

compute2:

ip: 172.29.245.11

# cinder volume hosts (NFS-backed)

# The settings here are repeated for each infra host.

# They could instead be applied as global settings in

# user_variables, but are left here to illustrate that

# each container could have different storage targets.

storage_hosts:

infra1:

ip: 172.29.236.11

container_vars:

cinder_backends:

limit_container_types: cinder_volume

nfs_volume:

volume_backend_name: NFS_VOLUME1

volume_driver: cinder.volume.drivers.nfs.NfsDriver

nfs_mount_options: "rsize=65535,wsize=65535,timeo=1200,actimeo=120"

nfs_shares_config: /etc/cinder/nfs_shares

shares:

- ip: "172.29.244.15"

share: "/vol/cinder"

infra2:

ip: 172.29.236.12

container_vars:

cinder_backends:

limit_container_types: cinder_volume

nfs_volume:

volume_backend_name: NFS_VOLUME1

volume_driver: cinder.volume.drivers.nfs.NfsDriver

nfs_mount_options: "rsize=65535,wsize=65535,timeo=1200,actimeo=120"

nfs_shares_config: /etc/cinder/nfs_shares

shares:

- ip: "172.29.244.15"

share: "/vol/cinder"

infra3:

ip: 172.29.236.13

container_vars:

cinder_backends:

limit_container_types: cinder_volume

nfs_volume:

volume_backend_name: NFS_VOLUME1

volume_driver: cinder.volume.drivers.nfs.NfsDriver

nfs_mount_options: "rsize=65535,wsize=65535,timeo=1200,actimeo=120"

nfs_shares_config: /etc/cinder/nfs_shares

shares:

- ip: "172.29.244.15"

share: "/vol/cinder"

Индивидуальная настройка окружения¶

Опционально размещаемые файлы в /etc/openstack_deploy/env.d позволяют модифицировать группы Ansible. Это позволяет оператору задать, будут ли сервисы запущены в контейнере (по умолчанию), либо же на сервере (без контейнера).

Для данного окружения, cinder-volume работает в контейнере на инфраструктурных хостах. Что бы сделать это, создайте файл /etc/openstack_deploy/env.d/cinder.yml со следующим содержанием:

---

# This file contains an example to show how to set

# the cinder-volume service to run in a container.

#

# Important note:

# When using LVM or any iSCSI-based cinder backends, such as NetApp with

# iSCSI protocol, the cinder-volume service *must* run on metal.

# Reference: https://bugs.launchpad.net/ubuntu/+source/lxc/+bug/1226855

container_skel:

cinder_volumes_container:

properties:

is_metal: false

Пользовательские переменные¶

В файле /etc/openstack_deploy/user_variables.yml расположены глобальные переопределения стандартных переменных.

В данном окружении распределитель нагрузки размещен на инфраструктурных хостах. Убедитесь, что keepalived также настроен совместно с HAProxy, разместив следующее содержание в файле /etc/openstack_deploy/user_variables.yml .

---

# This file contains an example of the global variable overrides

# which may need to be set for a production environment.

## Load Balancer Configuration (haproxy/keepalived)

haproxy_keepalived_external_vip_cidr: "<external_vip_address>/<netmask>"

haproxy_keepalived_internal_vip_cidr: "172.29.236.9/32"

haproxy_keepalived_external_interface: ens2

haproxy_keepalived_internal_interface: br-mgmt